Artificial intelligence chip startup Cerebras Systems Inc. has partnered with the Abu Dhabi-based technology firm Group 42 Holding Ltd. on what it says is the world’s largest AI training supercomputer, offering enterprises an alternative to systems made by Nvidia Corp.

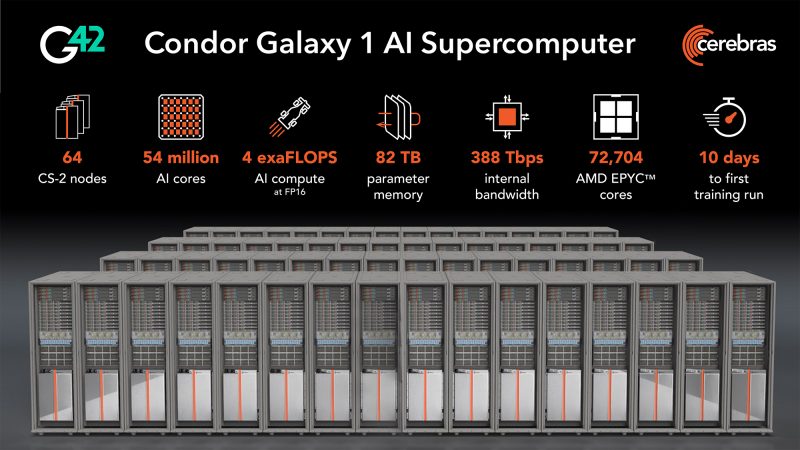

The company announced today that phase one of its new system, called Condor Galaxy 1, is now up and running in Santa Clara, California. The supercomputer, which reportedly cost more than $100 million, will double in size over the coming weeks and will be joined in 2024 by additional systems in Austin, Texas, and Asheville, North Carolina. More sites overseas are also slated to go online that year, bringing the total to nine.

Cerebras Systems said Condor Galaxy 1 is designed to provide the enormous computing power required to train AI models, a task that has become Nvidia's specialty thanks to its graphics processing units. In an interview with Bloomberg, Cerebras Chief Executive Andrew Feldman said it's the world's biggest purpose-built AI computing center and a cost-effective alternative to Nvidia's technology.

The partnership with G42 represents a significant push from the United Arab Emirates into the AI world. G42 is focused on applying AI to practical use cases in fields such as healthcare and aviation.

G42 will use the supercomputers for its own projects, and the two companies will also offer excess capacity to commercial customers as a service.

According to Cerebras, Condor Galaxy 1 delivers all of the ingredients required to train the most powerful foundation models: vast computing power, enormous datasets and specialized AI expertise. The company says it's democratizing access to AI by combining the machine's raw power with diverse G42 datasets spanning healthcare, energy and climate studies.

The supercomputer, paired with expert teams of hardware and data engineers and AI scientists, enables Cerebras and G42 to offer a full-service AI training system that they say will rival any cloud-based system.

With Condor Galaxy 1 now online, Cerebras is looking to showcase its technology and win wider adoption. Unlike Nvidia's GPUs, which come packaged as small PCIe cards or SXM modules, the supercomputer is built from massive chips, each made from an entire silicon wafer and roughly the size of a pizza.

Each CS-2 accelerator is built around one of these wafer-scale chips, which is said to house 850,000 cores and 40 gigabytes of on-chip static random-access memory with 20 petabytes per second of memory bandwidth. Each system is equipped with 12 network interfaces running at 100 gigabits per second, allowing up to 192 systems to be interconnected into one enormously powerful cluster.

At present, Condor Galaxy 1 comprises 32 CS-2 systems, making it twice as large as the Andromeda supercomputer the company announced last year. They are supported by 36,352 Epyc processor cores from Advanced Micro Devices Inc., which handle administrative tasks such as networking so the CS-2 accelerators can devote their full power to AI workloads. These figures will double when phase two comes online in the coming weeks.
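For a sense of scale, the per-system figures can be rolled up into rough cluster totals. This is back-of-the-envelope Python arithmetic, not Cerebras data: it treats phase one as 32 CS-2 systems and phase two as 64, assumes each of the 12 interfaces runs at 100 gigabits per second, and ignores any overhead. The helper name `cluster_totals` is hypothetical.

```python
# Back-of-the-envelope totals for Condor Galaxy 1, built only from the
# per-system figures quoted in the article. Assumptions (not official specs):
# 32 CS-2 systems in phase one, 64 in phase two, and each I/O interface
# running at 100 gigabits per second.

CORES_PER_SYSTEM = 850_000       # cores per wafer-scale chip
SRAM_GB_PER_SYSTEM = 40          # on-chip SRAM per chip, in gigabytes
INTERFACES_PER_SYSTEM = 12       # external network interfaces per system
GBIT_PER_INTERFACE = 100         # assumed gigabits per second per interface


def cluster_totals(num_systems: int) -> dict:
    """Return aggregate cores, SRAM (TB) and external I/O (Tb/s) for a cluster."""
    io_gbit = num_systems * INTERFACES_PER_SYSTEM * GBIT_PER_INTERFACE
    return {
        "systems": num_systems,
        "cores": num_systems * CORES_PER_SYSTEM,
        "sram_tb": num_systems * SRAM_GB_PER_SYSTEM / 1000,
        "io_tbit_per_s": io_gbit / 1000,
    }


if __name__ == "__main__":
    for phase, systems in (("phase one", 32), ("phase two", 64)):
        print(phase, cluster_totals(systems))
```

Under these assumptions, phase one works out to 27.2 million cores, 1.28 TB of on-chip SRAM and 38.4 Tb/s of aggregate external I/O; doubling to 64 systems doubles each figure.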

Feldman told Bloomberg that one of Condor Galaxy 1's advantages is that its computing power is centralized. He said it can train AI models with up to 600 billion parameters, a limit that will later be extended to 100 trillion.

That means customers will be able to run massive training jobs in one go, rather than breaking models into segments and distributing them across multiple clusters. Nor will they need to wrestle with the complicated software required to make thousands of distributed GPUs work together in concert.
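To illustrate why models at this scale are normally split across many GPUs, the sketch below estimates how much memory the parameters alone occupy. It reads the 600 billion and 100 trillion figures as model parameters and assumes 2 bytes per parameter for 16-bit weights, plus the common rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer; activations are ignored, and the helper name `terabytes` is hypothetical.

```python
# Rough memory estimate for model parameters. Assumptions (illustrative only):
# 2 bytes per parameter for 16-bit weights, and ~16 bytes per parameter for
# mixed-precision Adam training (16-bit weights and gradients, a 32-bit master
# copy of the weights, and two 32-bit Adam moment buffers). Activations ignored.

BYTES_WEIGHTS_FP16 = 2
BYTES_TRAINING_ADAM = 2 + 2 + 4 + 4 + 4  # = 16 bytes per parameter


def terabytes(num_params: int, bytes_per_param: int) -> float:
    """Convert a parameter count into decimal terabytes of storage."""
    return num_params * bytes_per_param / 1e12


if __name__ == "__main__":
    for label, params in (("600 billion", 600 * 10**9), ("100 trillion", 100 * 10**12)):
        print(
            f"{label} params: weights ~{terabytes(params, BYTES_WEIGHTS_FP16):,.1f} TB, "
            f"training state ~{terabytes(params, BYTES_TRAINING_ADAM):,.1f} TB"
        )
```

By this estimate, the 16-bit weights of a 600-billion-parameter model come to about 1.2 TB and the full training state to about 9.6 TB, far beyond the tens of gigabytes of memory on a single GPU. That gap is why such models are usually sharded across many GPUs, the kind of coordination Condor Galaxy 1 is pitched as avoiding.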

Few doubt that Condor Galaxy 1 is up to the job of training very large models, but Constellation Research Inc. Vice President and Principal Analyst Andy Thurai questioned whether this is what enterprises actually want. He told SiliconANGLE that most enterprises aren't looking to up the stakes and train ever bigger and better AI models. "The trends I have seen suggest enterprises want to train more small language models, which are much smaller than LLMs but more specific to certain domains, trained on proprietary company data," Thurai said. "If that is where enterprise models are moving, I don't see much need for such massive supercomputers to train AI."

However, Thurai said Cerebras's system might still appeal to some customers, especially if its claims that it can halve the cost of AI training hold up. "ESG-loving companies would be very interested in this service," Thurai added. "Plus, there is a lot of hunger for high-performance computing use cases. That market is expected to grow to $50 billion a year in the near future, and Cerebras could add a ton of value there."

In any case, Thurai’s colleague Holger Mueller believes enterprises will be pleased to see they have more options available when it comes to training and running their AI initiatives. “The underlying platform of AI models is of critical importance, and enterprises need more options and competition among providers,” he said. “Additional competition means the players involved will be forced to maintain competitive prices and continue innovating, so enterprises get all the benefits. However, Cerebras’s platform is largely untested, so it will be key to watch and see what kinds of workloads it attracts and how well they play.”

Nvidia currently dominates the market for AI computing power. It has benefited immensely as cloud computing infrastructure providers such as Amazon Web Services Inc. and Microsoft Corp. have stocked up on thousands of its GPUs to meet the incredible demand for ChatGPT and other generative AI services. The trend has established Nvidia as the world’s most valuable chipmaker, and it’s said to control an 80% share of the market for chips that can handle AI workloads.

THANK YOU